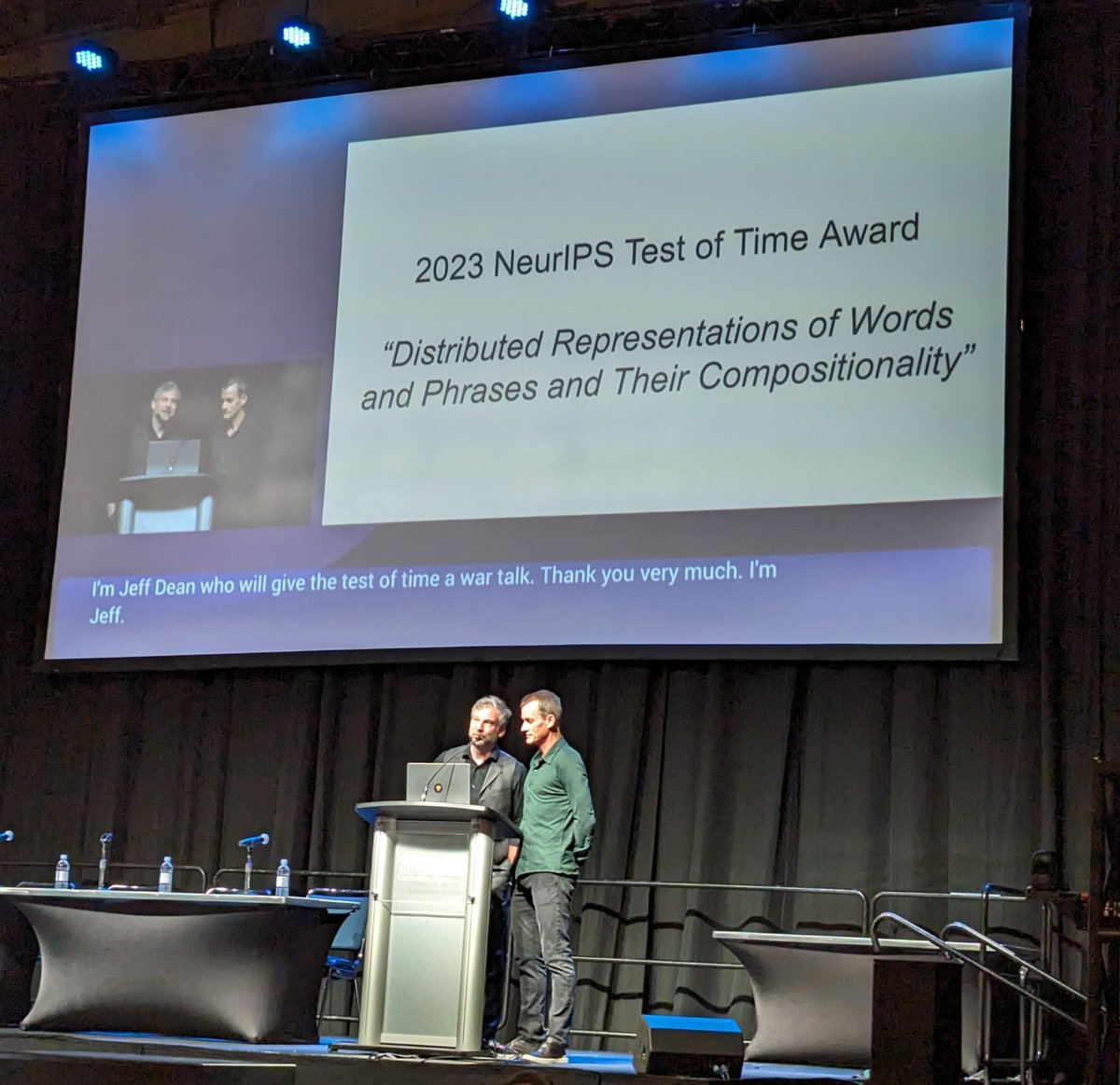

It has been 10 years since the release of the word2vec paper. Word2vec is arguably the first model (if we can even call it that, given its simplicity: it consists of just two arrays of vectors) to demonstrate the power of distributed representation learning. The training process is surprisingly straightforward. It pulls each word's vector closer to the vectors of words that appear near it in the same sentence, while pushing it away from a few randomly sampled words that are usually unrelated. Applied to a large corpus, this process yields vectors that roughly point in the same direction in a high-dimensional space for semantically related words.

It's worth noting that Large Language Models (LLMs) also learn context-independent vectors, similar to those learned by word2vec, as part of their training: an embedding table with one vector per token. The models use these vectors to represent any input, then transform them into context-dependent vectors based on the surrounding sentence.

Tomas Mikolov's contributions: authorswithcode.org/researchers/?a…
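To make the "pull together, push apart" training concrete, here is a minimal pure-Python sketch of skip-gram with negative sampling, the word2vec variant that matches this description. It is a toy under stated assumptions, not the original implementation. `train_sgns` and `cosine` are hypothetical helper names. Negatives are drawn uniformly rather than from word2vec's smoothed unigram distribution, and there is no subsampling or learning-rate decay. `w_in` and `w_out` are the "two arrays of vectors".

```python
import math
import random


def sigmoid(x: float) -> float:
    # Clamp to avoid math.exp overflow on extreme scores.
    x = max(-30.0, min(30.0, x))
    return 1.0 / (1.0 + math.exp(-x))


def train_sgns(sentences, dim=16, window=2, negatives=3, lr=0.05, epochs=100, seed=0):
    """Toy skip-gram with negative sampling (hypothetical helper, not reference code)."""
    rng = random.Random(seed)
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    # The "two arrays of vectors": word (input) and context (output) embeddings.
    w_in = [[rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for _ in vocab]
    w_out = [[0.0] * dim for _ in vocab]

    for _ in range(epochs):
        for sent in sentences:
            for pos, word in enumerate(sent):
                center = w_in[index[word]]  # row alias, updated in place below
                lo, hi = max(0, pos - window), min(len(sent), pos + window + 1)
                for ctx_pos in range(lo, hi):
                    if ctx_pos == pos:
                        continue
                    ctx = index[sent[ctx_pos]]
                    # One positive pair (label 1) plus a few random negatives (label 0).
                    # Assumption: negatives are uniform, not unigram^0.75 as in word2vec.
                    targets = [(ctx, 1.0)]
                    for _ in range(negatives):
                        neg = rng.randrange(len(vocab))
                        if neg != ctx:
                            targets.append((neg, 0.0))
                    grad_center = [0.0] * dim
                    for t, label in targets:
                        out = w_out[t]
                        score = sigmoid(sum(a * b for a, b in zip(center, out)))
                        # g > 0 pulls the pair together, g < 0 pushes it apart.
                        g = lr * (label - score)
                        for d in range(dim):
                            grad_center[d] += g * out[d]
                            out[d] += g * center[d]
                    # Apply the accumulated update to the center word's vector.
                    for d in range(dim):
                        center[d] += grad_center[d]
    return {w: w_in[i] for w, i in index.items()}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    corpus = [
        ["the", "cat", "sat", "on", "the", "mat"],
        ["the", "dog", "sat", "on", "the", "rug"],
        ["a", "cat", "chased", "a", "mouse"],
        ["a", "dog", "chased", "a", "ball"],
    ]
    vectors = train_sgns(corpus)
    # Words sharing contexts ("cat", "dog") tend to end up with similar vectors.
    print(cosine(vectors["cat"], vectors["dog"]))
```

Only `w_in` is returned as the word vectors, which is the common convention. A corpus this small gives noisy similarities, so the printed number is illustrative rather than meaningful.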