Our lab is thrilled to receive the @CapitalOne fellowship in support of our work on multimodal reasoning and explainability. Understanding how AI systems integrate and reason across multiple modalities is a critical step toward building more transparent, interpretable, and trustworthy models. A big thank you to our students and collaborators @uvadatascience and @precogatiiith!

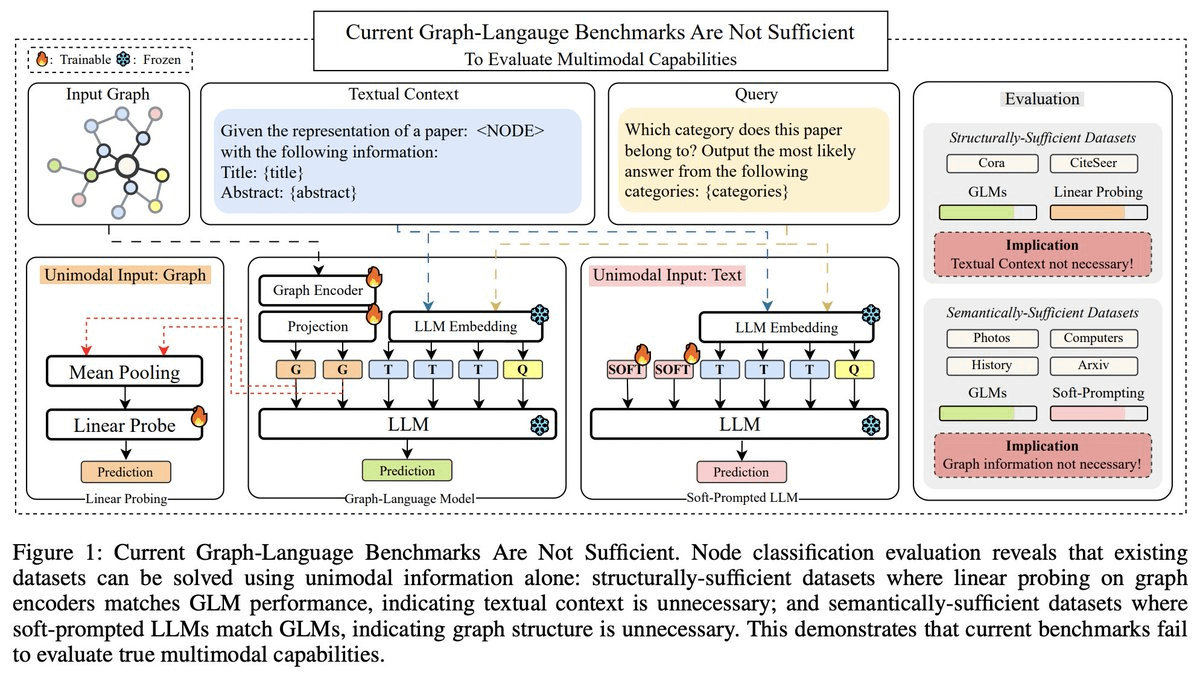

We are excited to share some early progress in this direction for multimodal graph-language models (GLMs) and reasoning! Our analysis using interpretability tools shows that graph encoders are basically equivalent to a full GLM, and it exposes modality bias in current GLM benchmarks. Finally, we introduce CLEGR🚊, which comprises tasks demanding true compositional graph+language reasoning. Work led by the awesome @ressurexn, @HariAakashK, Anirudh, and @akshitwt!
