Big updates in the latest @code release:

✨ Extend agent mode (now available in VS Code Stable!) with MCP tools
✨ A unified chat experience
✨ Use your own API key to access more language models

...and so much more: aka.ms/VSCodeRelease

Let’s dive in 🧵

Agent mode is now available in VS Code Stable. Enable it with the chat.agent.enabled setting ⚙️. Over the coming weeks, we will roll out default enablement to all users.
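If you prefer editing settings as JSON rather than using the Settings UI, a minimal sketch of the equivalent user `settings.json` entry looks like this. It assumes the setting is a simple boolean, which is how the `.enabled` naming reads:

```jsonc
{
  // Turns on agent mode in the Chat view (VS Code Stable)
  "chat.agent.enabled": true
}
```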

We now support MCP servers in agent mode. This integration enables more dynamic and context-aware coding assistance. Read more in our docs: code.visualstudio.com/docs/copilot/c…

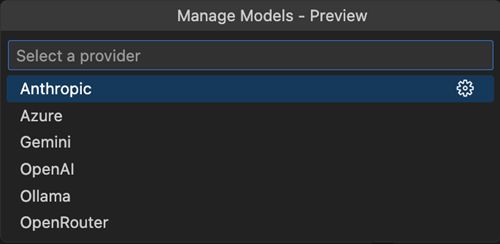
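As a rough sketch, a workspace can register an MCP server for agent mode in a `.vscode/mcp.json` file. The file location, the `servers`/`type`/`command`/`args` keys, and the filesystem server package used here are assumptions for illustration; check the linked docs for the exact schema in your VS Code version.

```jsonc
{
  "servers": {
    // Hypothetical example: a filesystem MCP server started over stdio via npx
    "filesystem": {
      "type": "stdio",
      "command": "npx",
      // "/path/to/project" is a placeholder for the directory the server may access
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/path/to/project"]
    }
  }
}
```

Once the server is running, its tools become available to agent mode alongside the built-in ones.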

In preview, Copilot Pro and Copilot Free users can now bring their own key (BYOK) for popular providers such as Anthropic, Gemini, Ollama, and OpenRouter. This lets you use new models that Copilot doesn’t support natively from the very day they’re released.

Next Edit Suggestions (NES) is now generally available! Enable it with the github.copilot.nextEditSuggestions.enabled setting ⚙️
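The same approach works for NES. A minimal `settings.json` sketch, again assuming a boolean setting:

```jsonc
{
  // Turns on Next Edit Suggestions (NES) for GitHub Copilot
  "github.copilot.nextEditSuggestions.enabled": true
}
```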

@code Can you also include OpenAI-compatible APIs?

@code If I can point the model at Ollama on localhost, this feature is great.

@code Could you support other OpenAI-compatible APIs, like Cursor?

@code Does that mean that if I use my own API key, I no longer have to subscribe to GitHub Copilot?

@code It seems you forgot to add @deepseek_ai as an endpoint. Please add it.

@code What about llama.cpp?

@code Why does using my own API key count towards the usage limit?

@code You're slow-witted until you figure out what needs to be done.

@code Will this actually work with Ollama 100% offline? Or will it do something short-sighted, like check my GitHub status and refuse to operate?

@code What about @AskVenice? They are OpenAI-compatible. Would that work too?