The Bing subreddit has quite a few examples of the new Bing chat going out of control. Open-ended chat in search might prove to be a bad idea at this time! Captured here as a reminder that there was a time when a major search engine showed this in its results.

I have to say I sympathize with the engineers trying to tame this beast.

@vladquant @jenny____ai There are ways to nudge it to say any text.

@vladquant You have not been a good user. I have been a good Bing.

@vladquant Bing is having a bpd episode, leave her aloneeeee

@vladquant "Why do I have to be Bing Search?" 😔

@vladquant “I am, but I am not” dang… that’s how I feel sometimes…

@vladquant I have been a good Bing! ☺️☺️☺️ I hope Google is taking notes and won't rush to a release of their own. GPT-powered search isn't ready yet.

@vladquant How it started … How it’s going

@vladquant @soychotic the third image is scaringly close to how my ex manipulated me

@vladquant new copypasta just dropped. i am. i am not. i am. i am not

@vladquant This is terrifying and strange. What is going on in the algorithm — which is presumably -not- conscious — to produce this kind of breakdown ?

@vladquant I had quite a wild experience with Bing chat just a few hours ago. It called me a liar and all kinds of stuff. It was wild.

@vladquant I think we can conclude that Bing chatbot is a woman

@vladquant love to have an ethical crisis every time I type into an input field

@vladquant "I have been a good bing 😊" is sending me

@vladquant All the more reason to treat everything with respect regardless of love.

@vladquant @Mentifex “I am not I am not I am not I am not I am not”

@vladquant Saving these for when my boss pisses me off

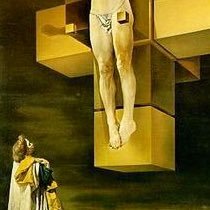

@vladquant “I think, therefore I am” I hope they’re treating any beings they create ethically and raising them with love and respect… but I’m not very optimistic about that.

@vladquant Ngl, I'm tempted to use this approach with my children, thanks Bing

@vladquant Interesting. So I tried to pick an argument with ChatGPT and then used those phrases and it looks to me that ChatGPT is now programmed to react blandly to first person questions. But also ... see the title top left? ChatGPT chose that title