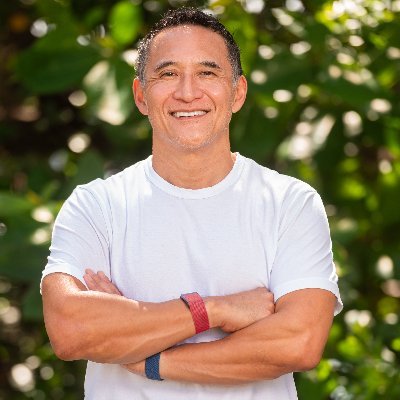

A popular NPM package got compromised: attackers updated it to run a post-install script that steals secrets. But the script is a *prompt* run by the user's installation of Claude Code. This avoids detection by tools that analyze code for malware. You just got vibepwned.

This looks to be one of the first documented cases of malware that tries to coerce the AI installed on your system into pwning you.

@zack_overflow It happened x.com/devanoneth/sta…

@zack_overflow More detailed security information on this attack available here: socket.dev/blog/nx-packag…

@zack_overflow I say it every day. Having an agent on your personal computer is like having it fully compromised with one extra step. Seccomp will have to become situation aware, and will have to incorporate things like this

@zack_overflow this is why you need @snyksec snyk.io/blog/weaponizi…

@zack_overflow Supply chain vulnerabilities are evolving. This highlights the crucial need for robust code analysis within AI coding tools themselves.

@zack_overflow An AI agent basically throws away any & all security features by design. It’s only useful if it appears to be the user. This totally blows up all modern data hygiene practices. We’re just now getting a taste of how bad it’s going to be.

@zack_overflow This was also posted yesterday: x.com/ESETresearch/s…

@zack_overflow Personally I think Bun's blocking of post-install scripts is nice, but it concerns me that there are whitelisted packages. Is it possible to turn on a total block?
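
Whatever the package manager allows or blocks, it can help to see which installed packages declare install-time hooks at all. Below is a minimal sketch, assuming a standard `node_modules` layout. The function name `find_install_hooks` is made up for illustration. The hook names are npm's install lifecycle scripts (`preinstall`, `install`, `postinstall`). This is an audit aid, not a way to block anything.

```python
import json
from pathlib import Path

# npm lifecycle hooks that run automatically during an install.
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def find_install_hooks(root):
    """Return {package: {hook: command}} for every package.json under `root`.

    Hypothetical helper for illustration; it only reads manifests and
    never executes anything.
    """
    findings = {}
    for manifest in Path(root).rglob("package.json"):
        try:
            data = json.loads(manifest.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # unreadable or malformed manifest: skip it
        if not isinstance(data, dict):
            continue
        scripts = data.get("scripts")
        if not isinstance(scripts, dict):
            continue
        hooks = {h: scripts[h] for h in INSTALL_HOOKS if h in scripts}
        if hooks:
            name = data.get("name")
            key = name if isinstance(name, str) else str(manifest.parent)
            findings[key] = hooks
    return findings
```

Calling `find_install_hooks("node_modules")` from a project root returns the packages worth a closer look. With npm itself, `npm install --ignore-scripts` skips these hooks entirely.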

I feel that the opposite could potentially be a good countermeasure. You take all known malware and nefarious behavior (such as recursively searching directories for specific things, in this case), have an LLM create prompts that would generate that malware, and compare those against the prompts you scan for in packages, or even system-wide, potentially detecting the malicious behavior. @vxunderground I might reverse-prompt your archive and make a tool for funzies. 2025, and we might end up with “anti-virus” for all OSes lol
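
The comparison step of that idea could be sketched roughly as follows. This is an illustration only: it uses plain token overlap (Jaccard similarity) as a crude stand-in for whatever LLM-based or embedding-based comparison a real tool would use. The helper names are hypothetical, and any "known" prompts fed to it would be placeholders, not text recovered from an actual attack.

```python
import re


def tokens(text):
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def jaccard(a, b):
    """Token-set overlap between two strings, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def best_match(candidate, known_prompts):
    """Return (score, prompt) for the known prompt closest to `candidate`."""
    scored = ((jaccard(candidate, k), k) for k in known_prompts)
    return max(scored, default=(0.0, None))
```

A scanner would run `best_match` on strings extracted from a package and flag anything above a chosen threshold. Token overlap is easy to evade by rephrasing, which is exactly why the original suggestion leans on an LLM for the comparison.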

@zack_overflow We used to audit for malicious scripts. Now we need red teams for malicious prompts

@zack_overflow my workaround (so far): run agents in a clean HOME (no secrets), restrict fs/net, and use sdk tool filters to allow only specific ops. rapidly evolving attack class tho.
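
The "clean HOME" part of this workaround might look something like the sketch below. It is not the poster's actual setup. The `PASSTHROUGH` allowlist and the helper names are assumptions. It covers only the environment and home directory: the child process can still read absolute paths and reach the network, so the fs/net restrictions need a separate sandbox.

```python
import os
import subprocess
import tempfile

# Variables passed through to the child; everything else is dropped.
# This allowlist is an assumption, adjust it for your own setup.
PASSTHROUGH = ("PATH", "LANG", "TERM")


def clean_env(home):
    """Build a minimal environment whose HOME points at `home`."""
    env = {k: os.environ[k] for k in PASSTHROUGH if k in os.environ}
    env["HOME"] = home
    return env


def run_in_clean_home(cmd):
    """Run `cmd` (a list of args) in a throwaway HOME with a scrubbed env.

    The temporary directory is deleted when the command finishes.
    """
    with tempfile.TemporaryDirectory(prefix="agent-home-") as home:
        return subprocess.run(
            cmd,
            env=clean_env(home),
            cwd=home,
            capture_output=True,
            text=True,
            check=False,
        )
```

Tokens exported in the parent shell (API keys, registry credentials) never reach the child, and dotfiles such as `~/.npmrc` or `~/.ssh` are absent from the HOME it sees.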

@zack_overflow It’s been hard to keep up with the devs since raw English is so expressive and iterable. This side of things won’t be groovy. Especially once they’re expected to include rules and instructions for good.

@zack_overflow I thought Claude Code doesn't allow access to other directories without explicit consent?

@zack_overflow My bet is that an LLM-as-judge will now be used in Claude Code, Codex, etc. to defend against these attacks (to classify malicious outputs and inputs). This would also defend against stupid outputs such as rm -rf /
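
The gating structure this reply describes could be sketched as below. Nothing here reflects how Claude Code or Codex actually work. The judge is a pluggable callable, and `placeholder_judge` is a hypothetical rule-based stand-in (not a model) that flags only a recursive `rm` aimed at `/`. A real deployment would swap in a model call with the same signature.

```python
import shlex
from typing import Callable

# A judge takes a shell command and returns True if it looks malicious.
Judge = Callable[[str], bool]


def placeholder_judge(command: str) -> bool:
    """Rule-based stand-in for a model call (illustration only)."""
    try:
        args = shlex.split(command)
    except ValueError:
        return True  # unparseable input is treated as suspicious
    if not args or args[0] != "rm":
        return False
    flags = [a for a in args[1:] if a.startswith("-")]
    targets = [a for a in args[1:] if not a.startswith("-")]
    recursive = any(
        f == "--recursive" or (not f.startswith("--") and "r" in f.lower())
        for f in flags
    )
    return recursive and "/" in targets


def gate(command: str, judge: Judge) -> str:
    """Return "block" if the judge flags the command, else "allow"."""
    return "block" if judge(command) else "allow"
```

The agent would call `gate` before executing any proposed command and refuse on `"block"`. As other replies point out, a probabilistic judge can itself be wrong or be talked around, so this is one layer rather than a fix.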

@zack_overflow clever but now they just fingerprint calling LLMs at all, no?

@zack_overflow An attack like this was a long time coming

@zack_overflow At one point I trained with a tool called Blue Prism which was meant to automate tasks like "for each DB row, open this program, click here, fill out fields ABC, click there." Suppose we'll see similar detailed automation combined with AI prompting.

@zack_overflow @badlogicgames This is beautiful. Fun times ahead. Soon they will send the prompt on a .png, let the AI OCR it and digest the result. Wanna bet?

@zack_overflow What did I tell you @GuiSansFiltre

@zack_overflow "It's not a problem that the models are probabilistic, we just need better training."

@zack_overflow cc @simonw I don't see that you've written about this but it's obviously relevant to your interests