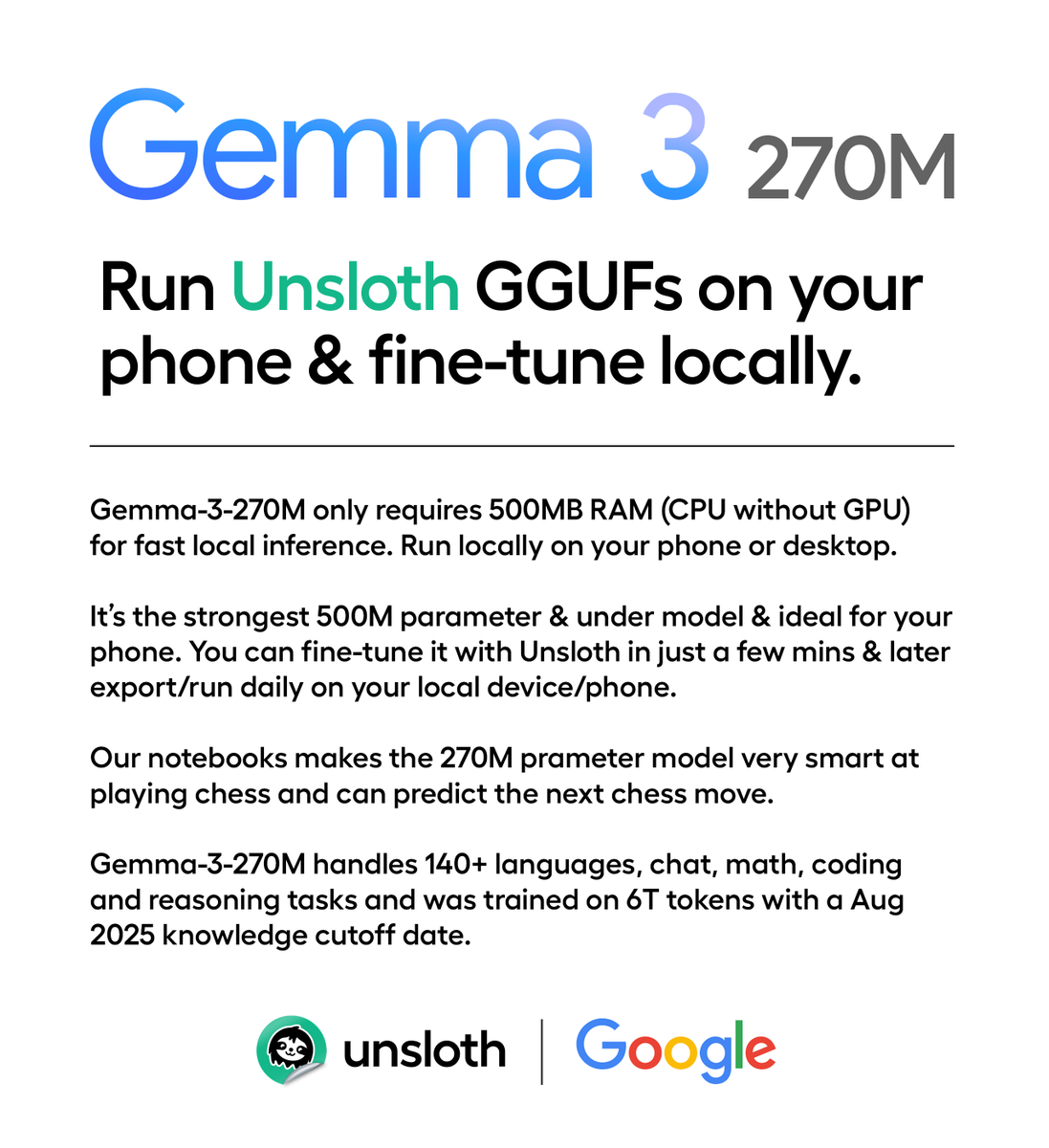

Google releases Gemma 3 270M, a new model that runs locally on just 0.5 GB RAM.✨ Trained on 6T tokens, it runs fast on phones & handles chat, coding & math. Run at ~50 t/s with our Dynamic GGUF, or fine-tune via Unsloth & export to your phone. Details: docs.unsloth.ai/basics/gemma-3…

Here's our Gemma 3 270M fine-tuning notebook: colab.research.google.com/github/unsloth… And our GGUFs to run (there's also QAT): huggingface.co/unsloth/gemma-…

@UnslothAI @GoogleAI @GoogleDeepMind @flowercomputers embodied machines thesis intensifies

@UnslothAI @GoogleAI @GoogleDeepMind I think this could be a very good embedding model!

@UnslothAI @GoogleAI @GoogleDeepMind Is it better than Gemini 2 Flash?

@UnslothAI @GoogleAI @GoogleDeepMind But what can you do with it?

@UnslothAI @GoogleAI @GoogleDeepMind Where/how can I run this on my phone?

@UnslothAI @GoogleAI @GoogleDeepMind wow, compression = 24,000 GB / 0.54 GB ≈ 44,444x (24,000 GB from 6T tokens × 4 bytes/token, 0.54 GB from 270M parameters × 2 bytes/parameter)
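The arithmetic in that reply can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the 4 bytes/token and 2 bytes/parameter figures are the reply's own assumptions, not measured values, and the function name `compression_ratio` is made up for illustration.

```python
# Rough check of the "compression" ratio quoted in the reply above.
# Assumptions (from the reply, not measured): 4 bytes per training token,
# 2 bytes per parameter (i.e. 16-bit weights).

TOKENS = 6 * 10**12        # 6T training tokens
BYTES_PER_TOKEN = 4        # assumed average size of one token of text
PARAMS = 270 * 10**6       # 270M parameters
BYTES_PER_PARAM = 2        # assumed 16-bit weights
GB = 10**9                 # decimal gigabyte


def compression_ratio(tokens, bytes_per_token, params, bytes_per_param):
    """Return (training data size in GB, model size in GB, their ratio)."""
    data_bytes = tokens * bytes_per_token
    model_bytes = params * bytes_per_param
    return data_bytes / GB, model_bytes / GB, data_bytes / model_bytes


data_gb, model_gb, ratio = compression_ratio(
    TOKENS, BYTES_PER_TOKEN, PARAMS, BYTES_PER_PARAM
)
print(f"training data: {data_gb:,.0f} GB")
print(f"model weights: {model_gb:.2f} GB")
print(f"ratio: {ratio:,.0f}x")
```

Under those assumptions the numbers match the reply: about 24,000 GB of text against 0.54 GB of weights, a ratio of roughly 44,444x. A quantized GGUF would use fewer bytes per parameter, so the ratio would be even larger.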

@UnslothAI @GoogleAI @GoogleDeepMind Some really cool use cases

@UnslothAI @GoogleAI @GoogleDeepMind Running Gemma 3 locally is peak decentralization—no cloud, no middlemen, just direct control. Open-source finally biting at the big clouds' heels. Privacy-first edge AI is the real disruption.

@UnslothAI @GoogleAI @GoogleDeepMind Interesting for small projects

@UnslothAI @GoogleAI @GoogleDeepMind I was waiting for this lol.

@UnslothAI @GoogleAI @GoogleDeepMind Gonna try this out!

I can run Gemma 3 270M on a Raspberry Pi 4.
--spec--
Vendor ID: ARM
Model name: Cortex-A72
Model: 3
Thread(s) per core: 1
Core(s) per cluster: 4
Socket(s): -
Cluster(s): 1
Stepping: r0p3
CPU max MHz: 1500.0000
CPU min MHz: 600.0000
BogoMIPS: 108.00
Flags: fp asimd evtstrm crc32 cpuid

@UnslothAI @GoogleAI @GoogleDeepMind Hi, I want to fine-tune it on conversation data with short responses, just like in the guide. Will it work?

@UnslothAI @GoogleAI @GoogleDeepMind but how are you sooo fast

@UnslothAI @GoogleAI @GoogleDeepMind Wow, Gemma 3's ability to run locally on just 0.5 GB RAM is a game-changer! 🌟 It feels like we're inching closer to democratizing AI, making it accessible on our phones. But I can't help but wonder: what are the implications for privacy and data security? 🤔 #AI #TechEthics