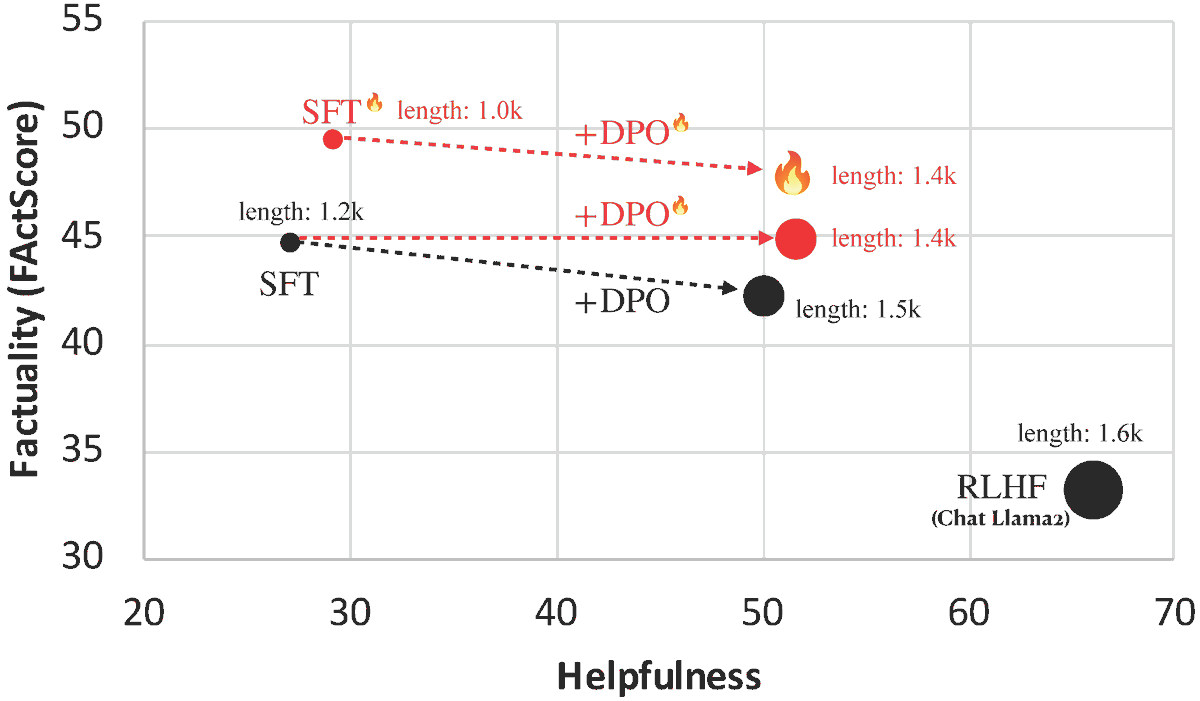

Introducing FLAME🔥: Factuality-Aware Alignment for LLMs. We found that the standard alignment process **encourages** hallucination. We therefore propose factuality-aware alignment, which improves factuality while maintaining the LLM's general instruction-following capability. arxiv.org/abs/2405.01525

@jacklin_64 @luyu_gao @XiongWenhan @scottyih We first identify factors that lead to hallucination in both alignment steps: supervised fine-tuning (SFT) and reinforcement learning (RL). In particular, we find that training the LLM on new or unfamiliar knowledge can encourage hallucination. (2/n)

@ccsasuke @jacklin_64 @luyu_gao @XiongWenhan @scottyih Good read! Looking forward to a more detailed analysis from you.

@ccsasuke @jacklin_64 @luyu_gao @XiongWenhan @scottyih This is cool. However, I suspect it will take more than alignment to solve this. Here's my take on addressing the fundamental sampling problem at its source👇 x.com/realcoffeeAI/s…