The wait is over! As the leading AI code review tool, CodeRabbit was given early access to OpenAI's GPT-5 model to evaluate the LLM's ability to reason through and find errors in complex codebases! Our evals found GPT-5 performed up to 190% better than other leading models!

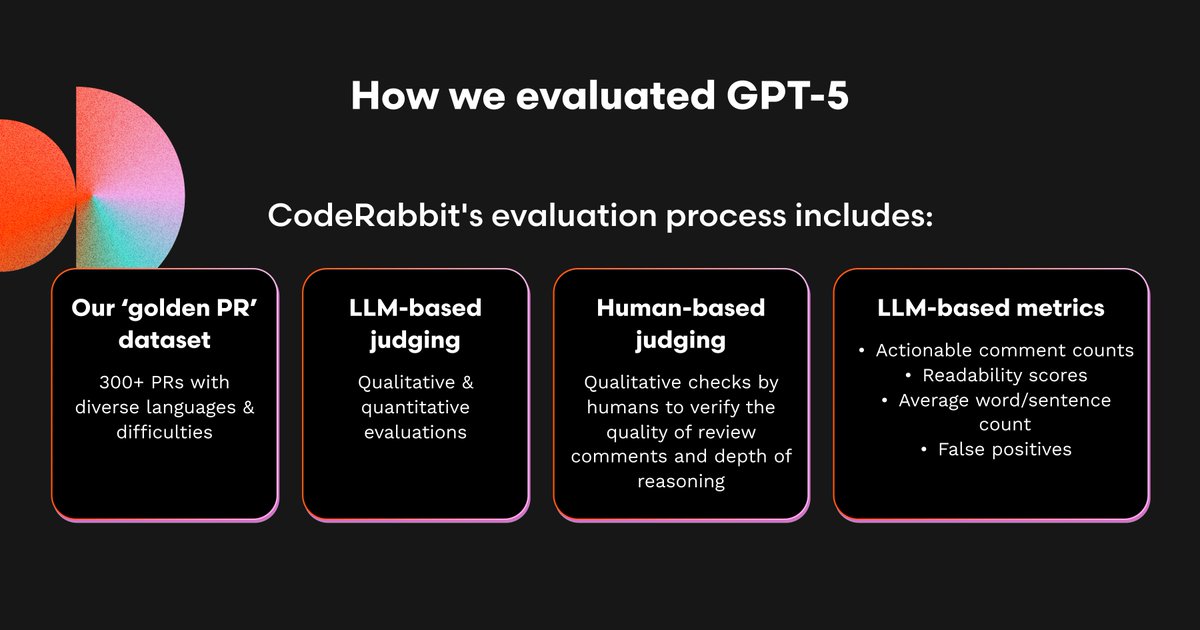

As part of our GPT-5 testing, we conducted extensive evals to uncover the model’s technical nuances, capabilities, and use cases around common code review tasks using over 300 carefully selected PRs.

Across the whole dataset, GPT-5 outperformed Opus-4, Sonnet-4, and OpenAI's O3 on a battery of 300 pull requests of varying difficulty and with diverse error types, representing a 22%-30% improvement over the other models.

We then tested GPT-5 on the hardest 200 PRs to see how much better it did on particularly hard-to-spot issues and bugs. It found 157 out of 200 bugs, while the other models found between 108 and 117. That represents a 34%-45% improvement!
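For readers who want to check the arithmetic, here is a minimal sketch of how the 34%-45% range follows from those bug counts. It assumes "improvement" means the relative gain over each baseline's count; the function and variable names are ours for illustration and are not part of the eval harness.

```python
def relative_improvement(new: int, baseline: int) -> float:
    """Percent by which `new` exceeds `baseline` (assumed definition of 'improvement')."""
    return (new - baseline) / baseline * 100


# Bug counts quoted above for the hardest 200 PRs.
gpt5_found = 157
other_low, other_high = 108, 117  # range reported for the other models

# The smallest gain is against the strongest baseline, the largest against the weakest.
smallest_gain = relative_improvement(gpt5_found, other_high)
largest_gain = relative_improvement(gpt5_found, other_low)

print(f"{smallest_gain:.1f}% to {largest_gain:.1f}%")  # prints "34.2% to 45.4%"
```

Rounded to whole numbers, that gives the 34%-45% range quoted above.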

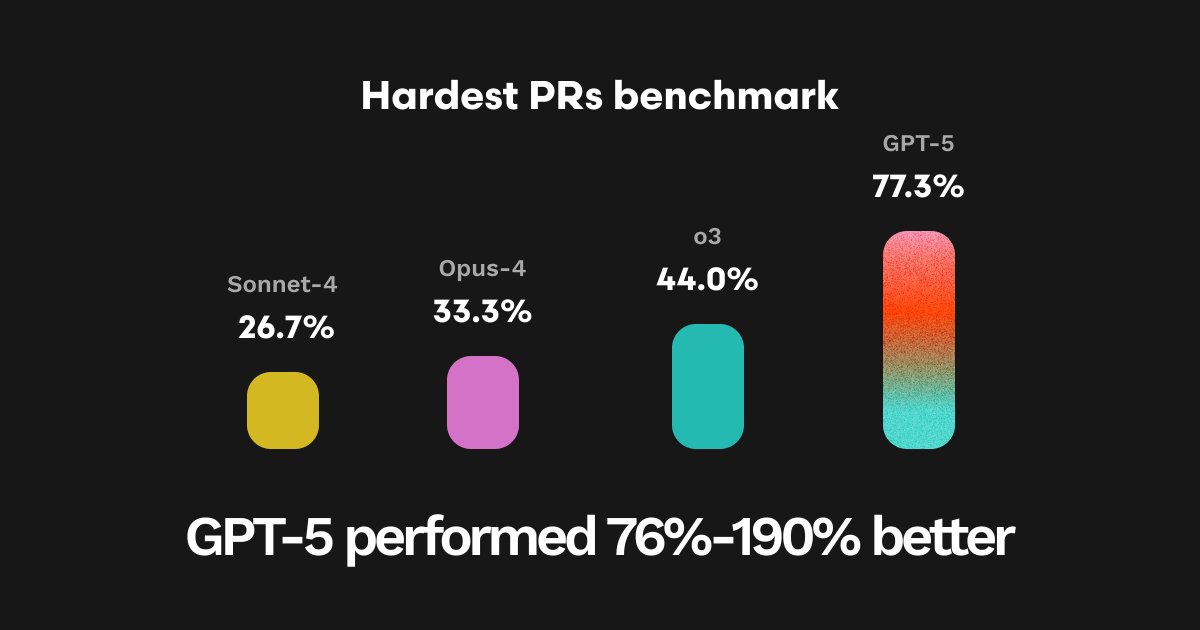

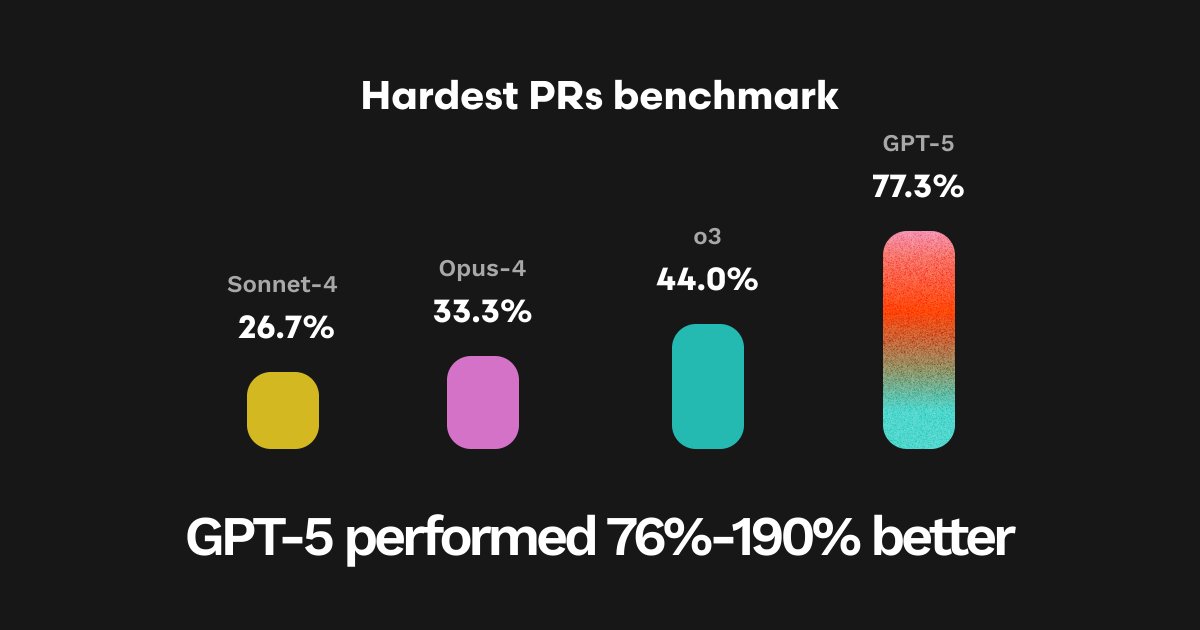

On the 25 hardest PRs in our evaluation dataset, GPT-5 achieved the highest-ever overall pass rate (77.3%), representing a 190% improvement over Sonnet-4, 132% over Opus-4, and 76% over O3.